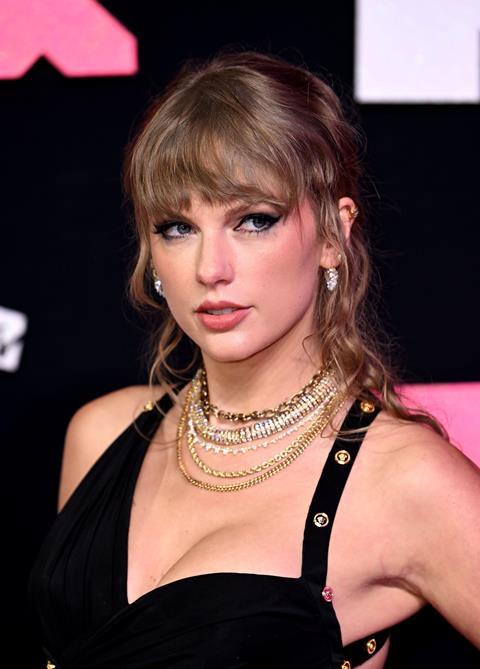

Taylor Swift’s globally recognised face was recently superimposed onto pornographic pictures. And as advertising copywriter Lizzie Hutchison rightly warns, if it can happen to her, it can happen to anyone.

A few months ago a mate and I were mucking about at work, coming up with ads for fake products. Our favourite was a massage oil called ‘Consensual’. The tagline? ‘For when she’s absolutely, definitely said yes!’

But in light of recent deepfake stories, this doesn’t seem so funny. A few weeks ago, Taylor Swift’s face was superimposed onto pornographic images that were then shared on X - with one photo racking up 47m views before it was removed. Grim.

I went to Parliament (surprised they let me in with my Barbie phone case tbh) to watch a screening of ‘My Blonde GF’, a short documentary that tells the story of Helen Mort’s experience of deepfake and image-based online abuse. What struck me was how interlinked power and consent are.

Having images produced without her permission, using her face and a porn star’s body, left Helen feeling violated, experiencing nightmares and an overwhelming sense that she was owned by the public. So someone, somewhere in a dark room with access to AI and Photoshop, has successfully turned her into a commodity. Internet property.

It’s a tale of stark contrasts. Power v powerless. Anonymity v ubiquity. Control v controlled. Appearance v reality. Deepfakes question what we assume to be authentic, blurring the boundaries between fact and fiction. And of course it’s all disgustingly unacceptable.

It’s not hard to see which side Christians should be on. But how do we actually help the situation? Well, prayer. Empathy for the victims. And encouraging the government to enforce stricter laws around this whole area. Currently the Online Safety Bill makes it illegal to distribute deepfakes, but not to actually make them. Room for improvement there.

Jesus gave a voice to women at a time when their testimony wasn’t even valid in court. I think he’d be horrified to learn that 96% of deepfakes are non-consensual pornography featuring women.

Degrading, bullying, exploiting. Rosie Morris, the director of My Blonde GF, expressed a concern that not only is this humiliating for the individual victims, but creating copious amounts of violent pornography dangerously impacts how men view women more broadly. Is this generation as empowered and forward-thinking as we like to believe? Or are people in 2024 actually adopting the attitude of AD 24?

Thanks to the wonders of technology, it’s now possible to sexually violate someone without coming into contact with them. Perhaps a better use of AI would be to train it to recognise deepfakes and alert the authorities when one is being generated. To up the ante, the perpetrator should forfeit the right to anonymity - experiencing the same level of exposure that their victims would otherwise be subject to.

Consent is powerful, be that digital or physical. And if you have to fake it, you’re not doing it right.
